This is an austere implementation of mining tweets using R. I will work on making it better sometime in the future (once I engulf R data structures more).

Apparently, on Windows system the authentication requires an extra step. Thanks to this blog post for clarifying that. See below.

After following the link provided, allowing access to the Twitter app and copying the security key produced back in R, everything was all set. To check:

Although it generates the cloud, at this point, it is not effective enough for analysis. One way to make it better is to get rid of common English words such as "and", "but", etc. Secondly, getting rid of Unicode and non-English languages would be an improvement also. And, there is MORE that needs to be done.

Hopefully soon.

#AFC

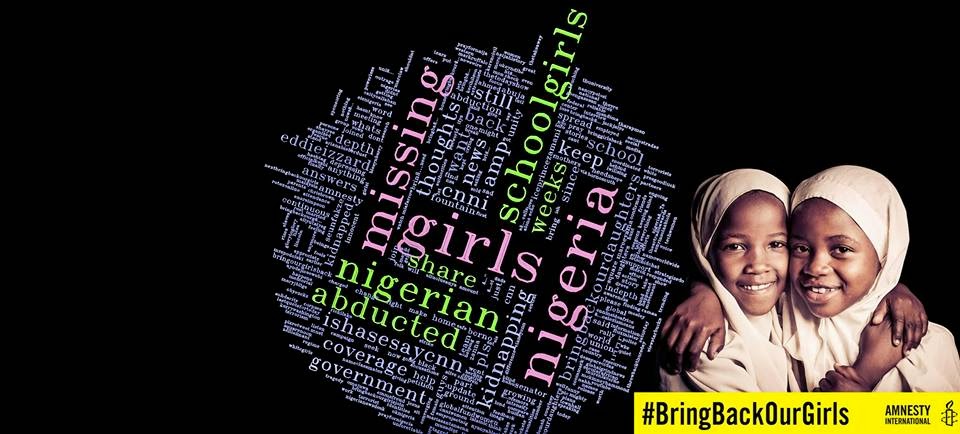

After changing the font, inverting the color and messing around in Photoshop:

Connecting to Twitter API

The first step was to get consumer key and consumer secret from Twitter. This is used for authentication purposes. To get these, I just created a new app on Twitter.Apparently, on Windows system the authentication requires an extra step. Thanks to this blog post for clarifying that. See below.

library("ROAuth") library("twitteR") #necessary step for Windows download.file(url="http://curl.haxx.se/ca/cacert.pem", destfile="cacert.pem") #to get your consumerKey and consumerSecret see the twitteR documentation for instructions credentials <- OAuthFactory$new (consumerKey='#################...', consumerSecret='#################...', requestURL='https://api.twitter.com/oauth/request_token', accessURL='https://api.twitter.com/oauth/access_token', authURL='https://api.twitter.com/oauth/authorize') credentials$handshake(cainfo="cacert.pem") save(credentials, file="twitter authentication.Rdata") registerTwitterOAuth(credentials)

After following the link provided, allowing access to the Twitter app and copying the security key produced back in R, everything was all set. To check:

> registerTwitterOAuth(credentials) [1] TRUE

Tweet Cloud

library(tm) library(wordcloud) tag<- searchTwitter("#AFC", n=100, cainfo="cacert.pem") df <- do.call("rbind", lapply(tag, as.data.frame)) twitterCorpus <- Corpus(VectorSource(df$text)) twitterCorpus <- tm_map(twitterCorpus, tolower) # remove punctuation twitterCorpus <- tm_map(twitterCorpus, removePunctuation) # remove numbers twitterCorpus <- tm_map(twitterCorpus, removeNumbers) tdm <- TermDocumentMatrix(twitterCorpus, control = list(minWordLength = 1)) m <- as.matrix(tdm) # calculate the frequency of words v <- sort(rowSums(m), decreasing=TRUE) words <- names(v) d <- data.frame(word=words, freq=v) wordcloud(d$word, d$freq, min.freq=3)

Result

#AFC

Although it generates the cloud, at this point, it is not effective enough for analysis. One way to make it better is to get rid of common English words such as "and", "but", etc. Secondly, getting rid of Unicode and non-English languages would be an improvement also. And, there is MORE that needs to be done.

Hopefully soon.

Soon

I just learnt that the common words mentioned above are referred as stop-words. And, it is pretty easy to handle them. After removing them and adding some colors the clouds look more beautiful. I also got rid of all words with substring "http" (maybe not in the most efficient way.. I will have to look into it).

# First of all make sure the connection to twitter is authenticated. # see file twiiterconnection.R # # if that has been done previously and this is a new session, open .Rdata # that has stored credentials # #if registerTwitterOAuth(credentials) is TRUE, you are good to go library(tm) library(wordcloud) library(ROAuth) library(twitteR) # check connection registerTwitterOAuth(credentials) tag<- searchTwitter("#MH370", n=700, lang= "en", cainfo="cacert.pem") df <- do.call("rbind", lapply(tag, as.data.frame)) twitterCorpus <- Corpus(VectorSource(df$text)) tdm <- TermDocumentMatrix( twitterCorpus, control = list(minWordLength = 1, removePunctuation = TRUE, removeNumbers = TRUE, stopwords = TRUE, tolower=TRUE)) m <- as.matrix(tdm) # frequency of words frequency <- sort(rowSums(m), decreasing=TRUE) words <- names(frequency) # get rid of words that contains "http" # hopefully there is a better way to do it words[which(grepl("http",names(frequency)))] <- "" d <- data.frame(word=words, freq=frequency) #save the image in png format png("mh370.png", width=12, height=12, units="in", res=300) wordcloud(d$word, d$freq,scale=c(10,.4),min.freq=10, max.words=Inf, random.order=FALSE, rot.per=.3, colors=brewer.pal(8, "Dark2")) dev.off()

Result

wordcloud(d$word, d$freq,scale=c(8,.1),min.freq=7, max.words=Inf, random.order=FALSE, rot.per=.3, vfont=c("gothic english","plain"), colors=brewer.pal(8, "Dark2"))

After changing the font, inverting the color and messing around in Photoshop: